Steve Jurvetson’s analysis of Moore’s Law is incisive and profound, a genuine insight, so I couldn’t resist excerpting it here:

The Moore’s Law Update

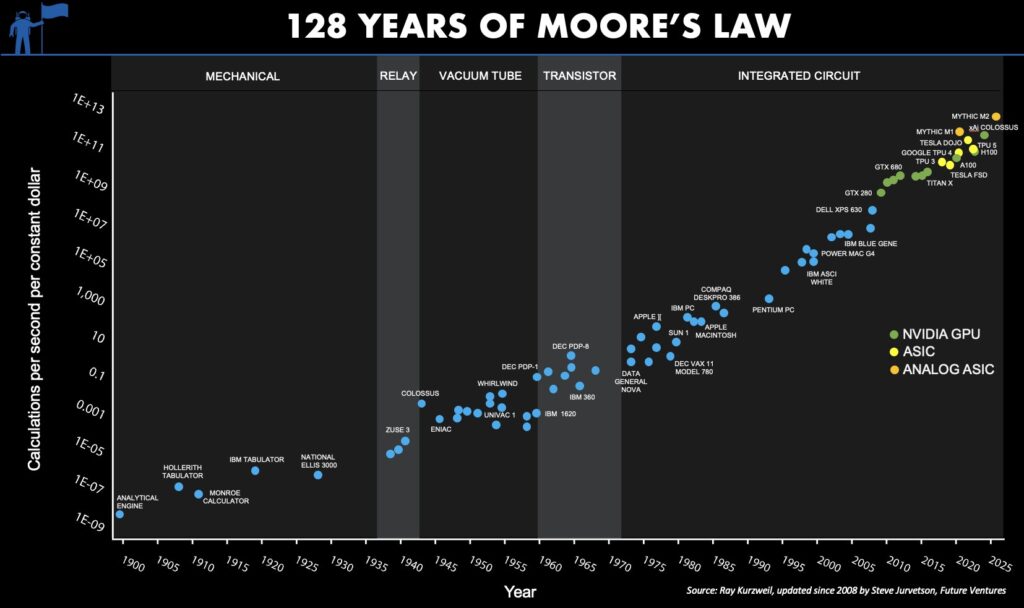

NOTE: this is a semi-log graph, so a straight line is an exponential; each y-axis tick is 100x. This graph covers a 1,000,000,000,000,000,000,000x (10^21) improvement in computation per dollar. Pause to let that sink in. Humanity’s capacity to compute has compounded for as long as we can measure it, exogenous to the economy, and starting long before Intel co-founder Gordon Moore noticed a refraction of the longer-term trend in the belly of the fledgling semiconductor industry in 1965.
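
To make the semi-log reading concrete, here is a minimal Python sketch. The helper names `ticks_spanned` and `exponential` are hypothetical, and the growth rate of 0.5 is an arbitrary example value. The 100x-per-tick factor and the 10^21 total come from the note above. The sketch shows two things: how many 100x ticks a 10^21 improvement spans, and why a pure exponential plots as a straight line on a log axis (equal time steps produce equal vertical steps).

```python
import math

# From the text: each y-axis tick on the chart is a factor of 100.
TICK_FACTOR = 100


def ticks_spanned(improvement: float, tick_factor: float = TICK_FACTOR) -> float:
    """How many y-axis ticks an improvement factor covers on a log-scaled axis."""
    return math.log10(improvement) / math.log10(tick_factor)


def exponential(t: float, y0: float = 1.0, rate: float = 0.5) -> float:
    """A pure exponential y(t) = y0 * e^(rate * t); the rate is an arbitrary example."""
    return y0 * math.exp(rate * t)


# On a log axis, log10(y) = log10(y0) + rate * log10(e) * t, which is linear in t:
# equal time steps give equal vertical steps, i.e. a straight line.
heights = [math.log10(exponential(t)) for t in range(6)]
steps = [b - a for a, b in zip(heights, heights[1:])]

print(f"1e21 spans {ticks_spanned(1e21):.1f} ticks of 100x")
print("vertical step per unit time:", [round(s, 4) for s in steps])
```

Read the other way, any stretch of the chart that bends upward is growing faster than a single exponential.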

I have color-coded it to show the transitions among integrated circuit architectures. You can see how the mantle of Moore’s Law has passed most recently from the GPU (green dots) to the ASIC (yellow and orange dots). The NVIDIA Hopper architecture is itself a transitional species between GPU and ASIC: its 8-bit performance is optimized for AI models, which now account for the majority of new compute cycles.

There are thousands of invisible dots below the line that marks the frontier of humanity’s capacity to compute (e.g., everything from Intel in the past 15 years). The computational frontier has shifted across many technology substrates over the past 128 years. Intel ceded leadership to NVIDIA 15 years ago, and further handoffs are inevitable.

Why the transition within the integrated circuit era? Intel lost to NVIDIA for neural networks because the fine-grained parallel compute architecture of a GPU maps better to the needs of deep learning. There is a poetic beauty to the computational similarity between a processor optimized for graphics and the computational needs of a sensory cortex, as reflected in the neural networks that were common in 2014. A custom ASIC optimized for neural networks extends that trend to its inevitable future in the digital domain. Further advances are possible with analog in-memory compute, an even closer biomimicry of the human cortex. The best business-planning assumption is that Moore’s Law, as depicted here, will continue for the next 20 years as it has for the past 128. (Note: the top-right dot for Mythic is a prediction for 2026, showing the effect of a simple process shrink from an ancient 40nm process node.)
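
As a rough back-of-the-envelope sketch, the two figures in the text (a 10^21 improvement and a span of 128 years) imply an average doubling time. The same rate can then be extrapolated over the 20-year planning horizon. The function names are hypothetical. The calculation also makes a simplifying assumption of a single constant rate across the whole period, so treat the result as an illustration rather than a forecast.

```python
import math

# Figures taken from the text: a 10^21 improvement in computation per dollar over 128 years.
TOTAL_IMPROVEMENT = 1e21
YEARS_OBSERVED = 128


def average_doubling_time_years(improvement: float, years: float) -> float:
    """Constant doubling time that would produce `improvement` over `years`."""
    doublings = math.log2(improvement)
    return years / doublings


def projected_improvement(years_ahead: float, doubling_time_years: float) -> float:
    """Growth factor after `years_ahead` if the doubling time stays constant (an assumption)."""
    return 2 ** (years_ahead / doubling_time_years)


dt = average_doubling_time_years(TOTAL_IMPROVEMENT, YEARS_OBSERVED)
print(f"average doubling time: {dt:.2f} years ({dt * 12:.1f} months)")
print(f"20-year factor at that average rate: {projected_improvement(20, dt):,.0f}x")
```

At a constant rate, 10^21 works out to roughly 70 doublings, or about one every 22 months. Continuing at that average pace for 20 more years gives a factor of roughly 1,900x.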

For those unfamiliar with this chart, here is a more detailed description:

Moore’s Law is both a prediction and an abstraction. It is commonly reported as a doubling of transistor density every 18 months, but Intel co-founder Gordon Moore never said that. The 18-month figure is a blend of his two predictions: in 1965, he predicted an annual doubling of transistor counts in the most cost-effective chip, and in 1975 he revised that to every 24 months. With a little hand-waving, most reports attribute 18 months to Moore’s Law, but there is quite a bit of variability. The popular perception of Moore’s Law is that computer chips are compounding in complexity at near-constant per-unit cost. This is one of the many abstractions of Moore’s Law, and it relates to the compounding of transistor density in two dimensions. Others relate to speed (the signals have less distance to travel) and computational power (speed × density).
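
To see how much that variability matters, here is a small Python sketch that compounds the three doubling periods mentioned above over one decade. The labels and the `growth_factor` helper are illustrative only. The periods themselves (12, 18 and 24 months) come from the text.

```python
# Doubling periods mentioned in the text, in months.
DOUBLING_PERIODS = {
    "Moore 1965 (annual)": 12,
    "popular attribution": 18,
    "Moore 1975 revision": 24,
}


def growth_factor(months_elapsed: float, doubling_months: float) -> float:
    """Factor by which a quantity grows if it doubles every `doubling_months`."""
    return 2 ** (months_elapsed / doubling_months)


DECADE_MONTHS = 120
for label, period in DOUBLING_PERIODS.items():
    print(f"{label:>22}: {growth_factor(DECADE_MONTHS, period):8.1f}x per decade")
```

Over ten years the three periods give 1024x, about 102x and 32x. A difference in the doubling period that looks small becomes a gap of more than an order of magnitude per decade.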

Unless you work for a chip company and focus on fab-yield optimization, you do not care about transistor counts. Integrated circuit customers do not buy transistors. Consumers of technology purchase computational speed and data storage density. When recast in these terms, Moore’s Law is no longer a transistor-centric metric, and this abstraction allows for longer-term analysis.

What Moore observed in the belly of the early IC industry was a derivative metric, a refracted signal from a longer-term trend, a trend that raises various philosophical questions and predicts mind-bending AI futures.

In the modern era of accelerating change in the tech industry, it is hard to find even five-year trends with any predictive value, let alone trends that span the centuries.

I would go further and assert that this is the most important graph ever conceived. A large and growing set of industries depends on continued exponential cost declines in computational power and storage density. Moore’s Law drives electronics, communications and computers, and it has become a primary driver in drug discovery, biotech and bioinformatics, medical imaging and diagnostics. As Moore’s Law crosses critical thresholds, a field that was once a lab science of trial-and-error experimentation becomes a simulation science, and the pace of progress accelerates dramatically, creating opportunities for new entrants in new industries. Consider the autonomous software stack for Tesla and SpaceX and the impact it is having on the automotive and aerospace sectors.

Every industry on our planet is going to become an information business. Consider agriculture. If you ask a farmer in 20 years’ time about how they compete, it will depend on how they use information — from satellite imagery driving robotic field optimization to the code in their seeds. It will have nothing to do with workmanship or labor. That will eventually percolate through every industry as IT innervates the economy.

Non-linear shifts in the marketplace are also essential for entrepreneurship and meaningful change. Technology’s exponential pace of progress has been the primary juggernaut of perpetual market disruption, spawning wave after wave of opportunities for new companies. Without disruption, entrepreneurs would not exist.

Moore’s Law is not just exogenous to the economy; it is why we have economic growth and an accelerating pace of progress. At Future Ventures, we see this in the growing diversity and global impact of the entrepreneurial ideas that reach us each year, from automobiles and aerospace to energy and chemicals.

We live in interesting times, at the cusp of the frontiers of the unknown and breathtaking advances. But it should always feel that way, engendering a perpetual sense of future shock.
