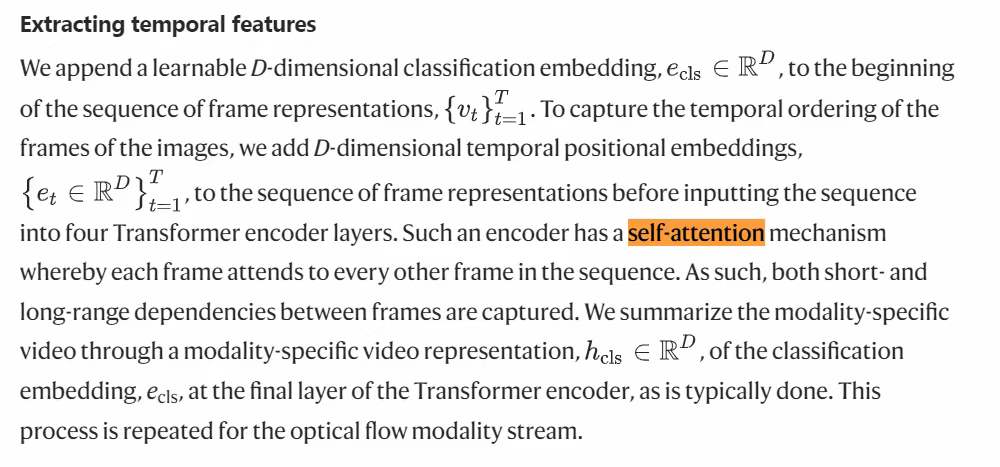

The Transformer's self-attention mechanism is, at bottom, about rationality and logic. When time-series data inherently follows a rational path, introducing self-attention into the AI model design may yield better results. In this paper, for example, adding self-attention to AI models for video analysis of robot-assisted surgery presupposes that a standardized, well-executed surgical procedure embodies “rationality.”

Authors: Dani Kiyasseh et al. Source: nature.com