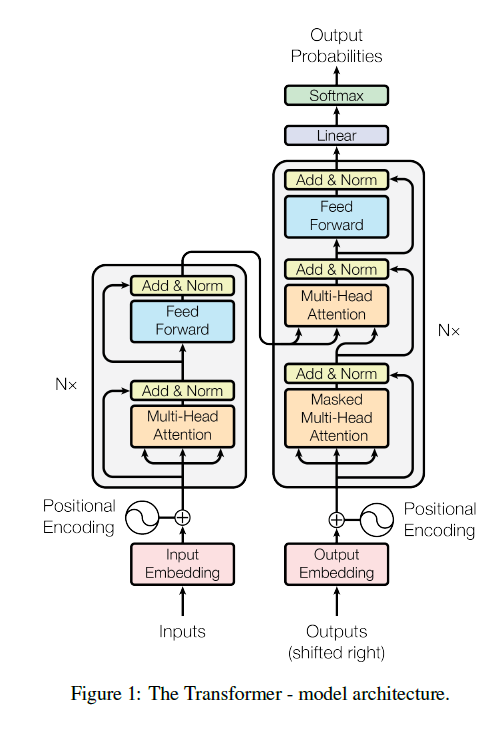

1. First, of course, is the paper Attention Is All You Need. It is a classic and a must-read.

2. Dr. Mu Li's video walkthrough of Attention Is All You Need: Transformer论文逐段精读 (a paragraph-by-paragraph close reading of the Transformer paper).

3. Dive into Deep Learning (《动手学深度学习》), which Dr. Mu Li co-authored.

4. Andrej Karpathy builds nanoGPT step by step in the video Let’s build GPT: from scratch, in code, spelled out.

The code from the video is in this Google Colab notebook: https://colab.research.google.com/drive/1JMLa53HDuA-i7ZBmqV7ZnA3c_fvtXnx-?usp=sharing

Andrej Karpathy's explanation of attention is a classic: "Attention is a communication mechanism. Can be seen as nodes in a directed graph looking at each other and aggregating information with a weighted sum from all nodes that point to them, with data-dependent weights."
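To make the graph view concrete, here is a minimal pure-Python sketch of scaled dot-product attention (softmax(QK^T / sqrt(d_k))V, as in the paper). Each node i scores every node j that points to it by the dot product of its query with j's key, normalizes the scores with a softmax so the weights sum to 1, and takes the weighted sum of the values. Assumptions: queries, keys, and values are passed in directly, so the learned projection matrices of a real Transformer are omitted. The `edges` predicate and the `causal` helper are hypothetical names for this illustration. `causal` lets each node see only itself and earlier nodes, which is the setting of a GPT-style decoder.

```python
import math
from typing import Callable, List, Optional

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max score for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(
    queries: List[Vector],
    keys: List[Vector],
    values: List[Vector],
    edges: Optional[Callable[[int, int], bool]] = None,
) -> List[Vector]:
    """For each node i, aggregate values[j] from every node j with an edge j -> i.

    The weights are data-dependent: softmax over q_i . k_j / sqrt(d_k).
    edges(j, i) is a hypothetical predicate; None means a fully connected graph.
    """
    d_k = len(keys[0])
    outputs = []
    for i, q in enumerate(queries):
        # Nodes that "point to" node i in the directed graph.
        sources = [j for j in range(len(keys)) if edges is None or edges(j, i)]
        scores = [dot(q, keys[j]) / math.sqrt(d_k) for j in sources]
        weights = softmax(scores)
        # Weighted sum of the source nodes' values.
        out = [0.0] * len(values[0])
        for w, j in zip(weights, sources):
            for t, v in enumerate(values[j]):
                out[t] += w * v
        outputs.append(out)
    return outputs


def causal(j: int, i: int) -> bool:
    # Hypothetical helper: node i only receives from itself and earlier nodes.
    return j <= i


if __name__ == "__main__":
    # Assumption: identity projections, so queries = keys = values = x.
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    out = attention(x, x, x, edges=causal)
    print(out[0])  # node 0 only sees itself -> [1.0, 0.0]
    print(out[1])  # node 1 mixes nodes 0 and 1, leaning toward node 1
```

Swapping `edges=causal` for `edges=None` turns the same function into the fully connected (encoder-style) case, in which every node attends to every other node. Only the graph changes, not the aggregation rule.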

5. The Transformer tutorial on the official TensorFlow website.

6. Drawing the Transformer Network from Scratch, by Thomas Kurbiel. It builds up the network with animated visualizations.

7. Transformers Explained Visually, by Ketan Doshi. This four-article series explains the Transformer very clearly. Recommendation: 5 stars.